Who’s hacking whom?

Who is hacking whom? The case of Brian Farrell (a.k.a. “Doctor Clu”) raises a host of interesting questions about the nature of hacking, vulnerability disclosure, the law, and the status of security research. Doctor Clu was brought to trial by FBI agents who identified him by his Internet Protocol (IP) address. But Clu was using Tor (The Onion Router) to hide his identity, so the FBI had to find a way to “hack” the system to reveal his identity. They didn’t do this directly, though. Allegedly, they subpoenaed some information security researchers at Carnegie Mellon University’s Software Engineering Institute (SEI) for a list of IP addresses. Why did SEI have the IP addresses? Ironically, these Department of Defense-funded researchers had bragged about a presentation they would give at the Black Hat security conference on de-anonymising Tor users “on a budget.” For whatever reason, they had Clu’s IP address as a result of their work, and the FBI managed to get it from them. Clu’s defense team tried to find out how exactly it was obtained and argued that this was a violation of the Fourth Amendment, but the judge refused: IP addresses are public, he said, even on Tor, where users have no ‘expectation of privacy.’

In this case, security researchers ‘hacked’ Tor in a technical sense; but the FBI also hacked the researchers in a legal sense – by subpoenaing the exploit and its results in order to bring Clu to trial. As in the recent WannaCry ransomware attack, or the Apple iPhone vs. FBI San Bernardino terrorism investigation of early 2016, this case reveals the entanglement of security research, the hoarding of exploits and vulnerabilities, the use of those tools by law enforcement and spy agencies, and ultimately citizens’ right to privacy online. The rest of this piece explores this entanglement, and asks: what are the politics of disclosing vulnerabilities? What new risks and changed expectations exist in a world where it is not clear who is hacking whom? What responsibilities do researchers have to protect their subjects and what expectations do Tor users have to be protected from such research?

“Tor’s motivation for three hops is Anonymity”[1]

“Tor is a low-latency anonymity-preserving network that enables its users to protect their privacy online” and enables “anonymous communication” (AlSabah et al., 2012: 73). The Tor p2p network is a mesh of proxy servers where the data is bounced through relays, or nodes. As of this writing, more than 7,000 relays enable the transfer of data, applying “onion routing” as a tactic for anonymity (Spitters et al., 2014).[2] Onion routing was first developed and designed by the US Naval Research Laboratory in order to secure online intelligence activities. Data is sent using Tor through a proxy configuration of three relays (entry, middle, exit): the sender’s client wraps the data in one layer of encryption per relay, and each relay strips off a single layer at its “hop” before forwarding the data to the next onion router. In this way, no single relay sees both the “clear text” and the sender’s IP address at the same time, which masks the identity of the user and provides anonymity. At the end of a browsing session the user history is deleted along with the HTTP cookie. Moreover, the greater the number of people using Tor, the higher the anonymity level for users who are connected to the p2p network; volunteers around the world provide servers and enable the Tor traffic to flow.
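The layering described above can be illustrated with a minimal sketch. It is a toy model, not Tor's actual protocol or cryptography: the XOR keystream built from SHA-256, the fixed relay keys, and the function names `wrap` and `route` are all assumptions made for illustration. In Tor itself, per-hop keys are negotiated when a circuit is built, and the cells use standard ciphers.

```python
import hashlib
from typing import List


def keystream(key: bytes, length: int) -> bytes:
    """Derive a toy keystream by hashing key + counter (illustration only, not secure)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def xor_layer(key: bytes, data: bytes) -> bytes:
    """Add or remove one layer; XOR with the same keystream undoes itself."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def wrap(message: bytes, relay_keys: List[bytes]) -> bytes:
    """Client side: apply one layer per relay, ending with the entry relay's layer."""
    cell = message
    for key in reversed(relay_keys):  # exit layer first, entry layer outermost
        cell = xor_layer(key, cell)
    return cell


def route(cell: bytes, relay_keys: List[bytes]) -> List[bytes]:
    """Each relay strips its own layer; returns what each relay passes on."""
    forwarded = []
    for key in relay_keys:  # entry, then middle, then exit
        cell = xor_layer(key, cell)
        forwarded.append(cell)
    return forwarded


# Hypothetical fixed keys so the example is repeatable.
keys = [b"entry-relay-key", b"middle-relay-key", b"exit-relay-key"]
message = b"GET /index.html"
views = route(wrap(message, keys), keys)
```

In this sketch, `views[0]` and `views[1]` (what the entry and middle relays forward) are still scrambled, and only `views[2]`, after the exit relay removes the last layer, equals the original message. The entry relay knows who sent the cell but not what it says; the exit relay sees the content but not the sender.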

There is also controversy surrounding the Tor network, connecting it to the so-called “Dark Net” and its “hidden services” that range from the selling of illegal drugs, weapons, and child pornography to sites of anarchism, hacktivism, and politics (Spitters et al., 2014: 1). All of this has increased the risks involved in using Tor. As shown in numerous studies (AlSabah et al., 2012; Spitters et al., 2014; Çalışkan et al., 2015; Winter et al., 2014; and Biryukov et al., 2013), different actors have compromised the Tor network, cracking its anonymity. These actors potentially include the NSA, authoritarian governments worldwide, and multinational corporations: all organisations that would like to discover the identity of users and their personal information (see for example, the case of Hacking Team).[3] Specifically, it should not be discounted that Tor exit node operators have access to the traffic going through their exit nodes, whoever they are (Çalışkan et al., 2015: 29). Besides governmental actors in the security industries, activists, dissidents and whistle-blowers using Tor, there are also academics who carry out research attempting to “hack” Tor.

The Researchers’ Ethical Dilemma

In January 2015, Brian Farrell, a.k.a. “Doctor Clu,” was arrested and charged with one count of conspiracy to distribute illegal “hard” drugs such as cocaine, methamphetamine and heroin at a “hidden service” marketplace (Silk Road 2.0) on the so-called “Dark Net” (Geuss 2015).[4] His IP address (along with those of other users) was purportedly captured in early 2014 by two researchers, Alexander Volynkin and Michael McCord, when they were carrying out their empirical study at SEI, a non-profit organisation at Carnegie Mellon University (CMU) in Pittsburgh, U.S.A. The SEI researchers were supposedly able to bypass security and, with their hack, obtain around 1000 IP addresses of users.

Since the beginning of 2014, an unnamed source had been giving authorities the IP address of those who accessed this specific part of the site (Vinton 2015).

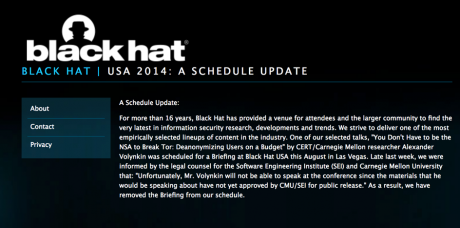

The researchers from SEI at CMU were invited to present their methods and findings on how to “de-anonymize hundreds of thousands of Tor clients and thousands of hidden services” at the Black Hat security conference in July 2014, but they never showed up, and the reason for their cancellation is still posted on the website (Figure 1).

Figure 1: Black Hat 2014 website Schedule Update (link).

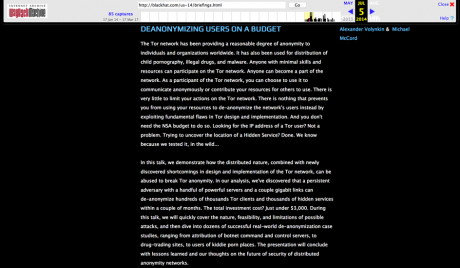

As the next screenshot from the Internet Archive’s Wayback Machine shows (Figure 2), the researchers’ abstract bragged of a low-budget exploit of Tor costing around $3000 and called on others to try it:

Looking for the IP address of a Tor user? Not a problem. Trying to uncover the location of a Hidden Service? Done. We know because we tested it, in the wild…. (Volynkin 2014).

Figure 2: Black Hat 2014 Briefings (link).

With regard to ethical research considerations, the researchers’ “anonymous subjects” didn’t know they were participating in a study-cum-hack. Many in the security research community regard this as an infringement of the ethical standards in the IEEE Code of Ethics, which prohibits “injuring others, their property, reputation, or employment by false or malicious action” (IEEE n.d.: section 2.4.2). Even when following such an officially recognized IEEE ethical code, “failure, discovery, and unintended or collateral consequences of success” (Greenwald et al. 2008: 78) could potentially harm “objects of study” – in this case the visitors to the Silk Road 2.0. The Dark Net is perhaps trickier than other fields, but there are also academics carrying out research there, contacting users, building their trust and protecting their sources.[5] Supposedly SEI started hosting part of Tor’s relays, but intentionally set up “malicious actors” so that they could carry out their research. According to one anonymous source reported at Motherboard, SEI

had the ability to deanonymize a new Tor hidden service in less than two weeks. Existing hidden services required upwards of a month, maybe even two months. The trick is that you have to get your attacking Tor nodes into a privileged position in the Tor network, and this is easier for new hidden services than for existing hidden services (Cox 2015).

It is crucial that the Tor Project is always informed of an exploit before it is released, so that it can fix potential flaws that enable deanonymization. During the past several years, researchers have continuously shared their data with the Tor Project and reported their findings, such as malicious attacks, or what is called “sniffing” – when the exit relay information is compromised. Once a study is published, patches are developed and Tor improves upon itself as these breaches of security are uncovered. Unlike the authors of other empirical studies, the SEI researchers did not inform the Tor Project of their exploits. Instead, the Tor Project discovered the exploits and contacted the researchers, who declined to give details. Only after the abstract for Black Hat was published online (late June 2014) did the researchers “give the Tor Project a few hints about the attack but did not reveal details” (Felten 2014). The Tor Project ejected the attacking relays and worked on a fix for all of July 2014, with a software update release at the end of the month, along with an explanation of the attack (Dingledine 2014). As this case shows, not only “malicious actors,” but also certain researchers can collect data on Tor users. According to the Tor Project director Roger Dingledine, the SEI researchers acted inappropriately:

Such action is a violation of our trust and basic guidelines for ethical research. We strongly support independent research on our software and network, but this attack crosses the crucial line between research and endangering innocent users (Dingledine 2014).

A Subpoena for Research

In November 2015, the integrity of these two SEI researchers was again brought into question when the rumour circulated that they had been subpoenaed by the FBI to hand over their collated IP addresses. According to Nicolas Christin, an assistant researcher at CMU, SEI is a non-profit and not an academic institution; its researchers are therefore not academics but are instead “focusing specifically on software-related security and engineering issues.” In 2015 the SEI renewed a five-year governmental contract for 1.73 billion dollars (Lynch 2015). In an official media statement, CMU’s SEI responded by explaining that their mission encompassed searching and identifying “vulnerabilities in software and computing networks so that they may be corrected” (CMU 2015). It is important to note that the US government (specifically the Departments of Defense and of Homeland Security) funds many of these research centers, such as CERT (Computer Emergency Response Team), a division of SEI which has existed ever since the Morris Worm first created a need for such an entity (Kelty 2011). To be precise, SEI is one of the Federally Funded Research and Development Centers (FFRDC), which are

unique non-profit entities sponsored and funded by the U.S. government that address long-term problems of considerable complexity, analyze technical questions with a high degree of objectivity, and provide creative and cost-effective solutions to government problems (Lynch 2015).

Legally, in the U.S., the FBI, SEC and the DEA can all subpoena researchers to share their research. However, the obtained information was not for public consumption, but for an agency within the U.S. Department of Justice, the FBI. Matt Blaze, a computer scientist at the University of Pennsylvania, made the following statement about conducting research:

When you do experiments on a live network and keep the data, that data is a record that can be subpoenaed. As academics, we’re not used to thinking about that. But it can happen, and it did happen (Vitáris 2016).

Besides the ethical questions regarding the researchers handing over their findings to the governments that have supported them (ostensibly with tax-payer money), the politics of security research and vulnerability disclosure continues to be a heated debate within academia and the general public. It seems that issuing subpoenas by law enforcement might provide a means to gather data on citizens and to obtain knowledge of academic research – which then remains hidden from the public. Computer security defense lawyer Tor Ekeland gave this comment:

It seems like they’re trying to subpoena surveillance techniques. They’re trying to acquire intel[ligence] gathering methods under the pretext of an individual criminal investigation (Vitáris 2016).

It is not clear whether the FBI was using a subpoena to acquire exploits, or whether the CMU (SEI) researchers were originally hired by the FBI and only later disclosed what happened, stating that they had been subpoenaed.[6] Either way, it would raise the issue of whether the FBI required a search warrant in order to obtain the evidence – the IP addresses.

Internet Search and Seizure

In January 2016, Farrell’s defense filed a motion to compel discovery, in an attempt to understand exactly how the IP address was obtained, as well as the past two-year history of the relationship between the FBI and SEI through working contracts. In February 2016, the Farrell case came to court in Seattle where it was finally revealed to the public that the “university-based research institute” was confirmed to be SEI at CMU, subpoenaed by the FBI (Farivar 2016). The court denied the defense’s motion to compel discovery. This statement from the order—Section II, Analysis—written by US District Judge Richard A. Jones answered the question of whether a search warrant was needed to obtain IP addresses:

SEI’s identification of the defendant’s IP address because of his use of the Tor network did not constitute a search subject to Fourth Amendment scrutiny (Cox 2016).[7]

In order to claim protection under the Fourth Amendment, there needs to be a demonstration of an “expectation of privacy” that is not merely subjective but is recognized as reasonable by other members of society. Furthermore, Judge Jones claimed that IP addresses, “even those of Tor users, are public, and that Tor users lack a reasonable expectation of privacy” (Cox 2016).

Again, according to the party’s submissions, such a submission is made despite the understanding communicated by the Tor Project that the Tor network has vulnerabilities and that users might not remain anonymous. Under these circumstances Tor users clearly lack a reasonable expectation of privacy in their IP addresses while using the Tor network. In other words, they take a significant gamble on any real expectation of privacy under these circumstances (Jones 2016:3).

Judge Jones reasoned that Farrell didn’t have a reasonable expectation of privacy because he used Tor; but he also stated that IP addresses are public because Farrell willingly gave his IP address to an Internet Service Provider (ISP) in order to have internet access. Moreover, the precedent that Judge Jones drew upon to uphold his order, namely United States v. Forrester, held that individuals have no reasonable ‘expectation of privacy’ in IP addresses and email addresses:

The Court reaches this conclusion primarily upon reliance on United States v. Forrester, 512 F.2d 500 (9th Cir. 2007). In Forrester, the court clearly enunciated that: Internet users have no expectation of privacy in …the IP address of the websites they visit because they should know that this information is provided to and used by Internet service providers for the specific purpose of directing the routing of information (Jones 2016:2-3).

Trust

In March 2016, Farrell eventually pleaded guilty to one count of conspiracy regarding the distribution of heroin, cocaine and amphetamines in connection with the hidden marketplace Silk Road 2.0 and received an eight-year prison sentence. In this case, the protection of an anonymous IP address was thwarted in various ways (a hack, a subpoena, a ruling) with regard to governmental intrusion. Privacy technologists, such as Christopher Soghoian, have provided testimony in similar cases, explaining that the government states that obtaining IP addresses “isn’t such a big deal,” yet the government can’t seem to elucidate how they could actually obtain them (Kopstein 2016).

“Campfire” XKCD 742

Whoever wanted to know the IP address would have to be in control of many nodes in the Tor network, around the world; and one would have to intercept this traffic and then correlate the entry and exit nodes. Besides being difficult, these correlation techniques cost time and money, and such exploits, including the one from the SEI researchers, were possible in 2014. Even if IP addresses are considered public when using Tor, they are anonymous unless they are correlated with a specific individual’s device.[8] To correlate Farrell’s IP address, the FBI had to obtain the list of IP addresses from Farrell’s ISP, Comcast.
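To make the idea of correlating entry and exit traffic concrete, here is a minimal sketch under stated assumptions. It is a generic timing-correlation toy, not a reconstruction of the SEI researchers' method, whose details are not given here. The packet timestamps, the flow labels, and the function names `bin_counts`, `pearson` and `best_match` are all hypothetical.

```python
from statistics import mean


def bin_counts(timestamps, bin_width, n_bins):
    """Count packets per time bin, turning a flow into a traffic-volume pattern."""
    counts = [0] * n_bins
    for t in timestamps:
        idx = int(t // bin_width)
        if 0 <= idx < n_bins:
            counts[idx] += 1
    return counts


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / (var_x * var_y) ** 0.5


def best_match(entry_flows, exit_flow, bin_width=1.0, n_bins=10):
    """Return the entry-side label whose pattern best matches the exit-side flow."""
    target = bin_counts(exit_flow, bin_width, n_bins)
    scores = {
        label: pearson(bin_counts(ts, bin_width, n_bins), target)
        for label, ts in entry_flows.items()
    }
    return max(scores, key=scores.get), scores


# Made-up packet times (seconds) as seen at entry relays the observer controls.
entry_flows = {
    "client_A": [0.1, 0.2, 0.3, 4.1, 4.2, 8.5],
    "client_B": [1.0, 2.0, 3.0, 5.0, 6.0, 7.0],
}
# Made-up exit-side flow: client_A's burst pattern, delayed by about 0.3 s.
exit_flow = [0.4, 0.5, 0.6, 4.4, 4.5, 8.8]

match, scores = best_match(entry_flows, exit_flow)
```

With this made-up data the exit-side pattern lines up with `client_A` and not with `client_B`. Real traffic is far noisier and the observer must actually see both ends of a circuit, which is why such attacks require controlling or watching many relays.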

The judge’s cited reason for denying the motion to compel disclosure was that IP addresses are in and of themselves not private, as people willingly provide them to third parties. Nowadays people increasingly use the internet (and write emails) instead of the telephone; and in order to do so, they must divulge their IP address to an ISP in order to access the internet. When users are outside of the Tor anonymity network, their IP is exposed to an ISP. However, when inside the “closed field” of Tor, is there no expectation of privacy along with the security of the content? And by extension, is there not an expectation of anonymity with the security of users’ identity?

Judge Jones also argued that Farrell didn’t have an expectation of privacy because he handed over his IP address to strangers running the Tor network.

[I]t is the Court’s understanding that in order for a prospective user to use the Tor network they must disclose information, including their IP addresses, to unknown individuals running Tor nodes, so that their communications can be directed towards their destinations. Under such a system, an individual would necessarily be disclosing his identifying information to complete strangers (Jones 2016:3).

Here the notion of trust surfaces and plays a salient role. When people share information with ethnographers, anthropologists, activists or journalists, it takes months, sometimes years, to gain their trust; and the anonymity of the source often needs to be maintained. These days, when people choose to use the Tor network, they trust a community that can see the IP address at certain points, and they trust that the Tor exit node operators neither divulge the IP addresses they collect nor make correlations. In an era of so-called Big Data, as more user data is collated (by companies, governments and researchers), correlation becomes easier and deanonymization occurs more frequently. In the Farrell case, researchers’ ethical dilemmas, the politics of vulnerability disclosure and law enforcement’s “hacking” of Tor all played a role in obtaining his IP address. Despite opposing judicial rulings, it can be argued that Tor users do have an expectation of privacy, even as the capture of IP addresses of users seeking anonymity online has been expedited.